Quantum Machine Learning: from Zero to Hero

In this post, you will learn how to build a Machine Learning pipeline that uses Quantum algorithms to solve a traditional Machine Learning problem. In addition, the Quantum implementations are benchmarked against their equivalent classical algorithms in order to evaluate the state of the art of this new trend in Machine Learning.

As with any Machine Learning pipeline, everything starts with a problem to solve. In this case, given a dataset of houses in Spain, the goal is to figure out whether a house has a swimming pool or not, based on features like the square meters, the year of construction, the province… It is a dummy problem, only for educational purposes.

TL;DR

If you are not into reading long posts with line-by-line explanations, you can download the Jupyter notebook containing all the code needed to run a Quantum pipeline here.

However, if you want to spend some time being evangelized with the latest Machine Learning trends, please continue reading and get yourself a cup of coffee!

Data loading & transformation

The data will be retrieved from one of the main data platforms in Spain: DataMarket. The dataset is called “Catastro” and the data used in this analysis can be downloaded from this link. Detailed documentation about this dataset can be consulted here.

import pandas as pd

data_url = 'https://datamarket.es/media/samples/catastro-sample.csv'
df = pd.read_csv(data_url)

After the data has been loaded, it should be prepared as in any traditional Machine Learning pipeline. This step is required whether a Quantum or a Classical approach is chosen. The operations applied at this stage are:

Selection of feature and target columns.

Null values cleanup.

Dataset balancing, as there are many more houses without a swimming pool than with one.

Encoding of categorical values (Quantum Machine Learning is not as sexy as LightGBM yet).

Feature standardization.

Train-test split.

You can have a look at all these data transformations in the following notebook.
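The steps listed above can be sketched roughly as follows. This is a minimal sketch on synthetic data, not the notebook's actual code: the column names (`square_meters`, `year_built`, `province`, `has_pool`) and the helper `prepare_data` are hypothetical, and undersampling the majority class is only one assumed way of balancing the dataset.

```python
import numpy as np
import pandas as pd
from sklearn.model_selection import train_test_split
from sklearn.preprocessing import StandardScaler


def prepare_data(df, feature_cols, target_col, seed=42):
    """Select, clean, balance, encode, split and scale a DataFrame."""
    # Keep only the feature and target columns and drop rows with nulls.
    data = df[feature_cols + [target_col]].dropna()

    # Balance by undersampling every class to the size of the rarest one.
    n_min = data[target_col].value_counts().min()
    data = data.groupby(target_col).sample(n=n_min, random_state=seed)

    # Encode non-numeric (categorical) columns as integer codes.
    X = data[feature_cols].copy()
    for col in X.select_dtypes(exclude="number").columns:
        X[col] = X[col].astype("category").cat.codes
    y = data[target_col]

    # Split first, then standardize with statistics from the train set only.
    X_train, X_test, y_train, y_test = train_test_split(
        X, y, test_size=0.25, stratify=y, random_state=seed
    )
    scaler = StandardScaler().fit(X_train)
    return scaler.transform(X_train), scaler.transform(X_test), y_train, y_test


# Synthetic stand-in for the real dataset (hypothetical columns and values).
rng = np.random.default_rng(0)
n = 400
df = pd.DataFrame({
    "square_meters": rng.normal(120, 40, n),
    "year_built": rng.integers(1950, 2020, n).astype(float),
    "province": rng.choice(["Madrid", "Malaga", "Valencia"], n),
    "has_pool": (rng.random(n) < 0.2).astype(int),
})
df.loc[::50, "square_meters"] = np.nan  # simulate some missing values

feature_cols = ["square_meters", "year_built", "province"]
X_train, X_test, y_train, y_test = prepare_data(df, feature_cols, "has_pool")
print(X_train.shape, X_test.shape)
```

Fitting the scaler on the training split only is a design choice that keeps information from the test set out of the training data.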

Data modeling

Once the X_train, X_test, y_train, y_test quartet is ready for action, four Machine Learning models are going to be tested on the binary classification problem of whether a house has a swimming pool or not: two Quantum based ones and two traditional ones.

Quantum approach

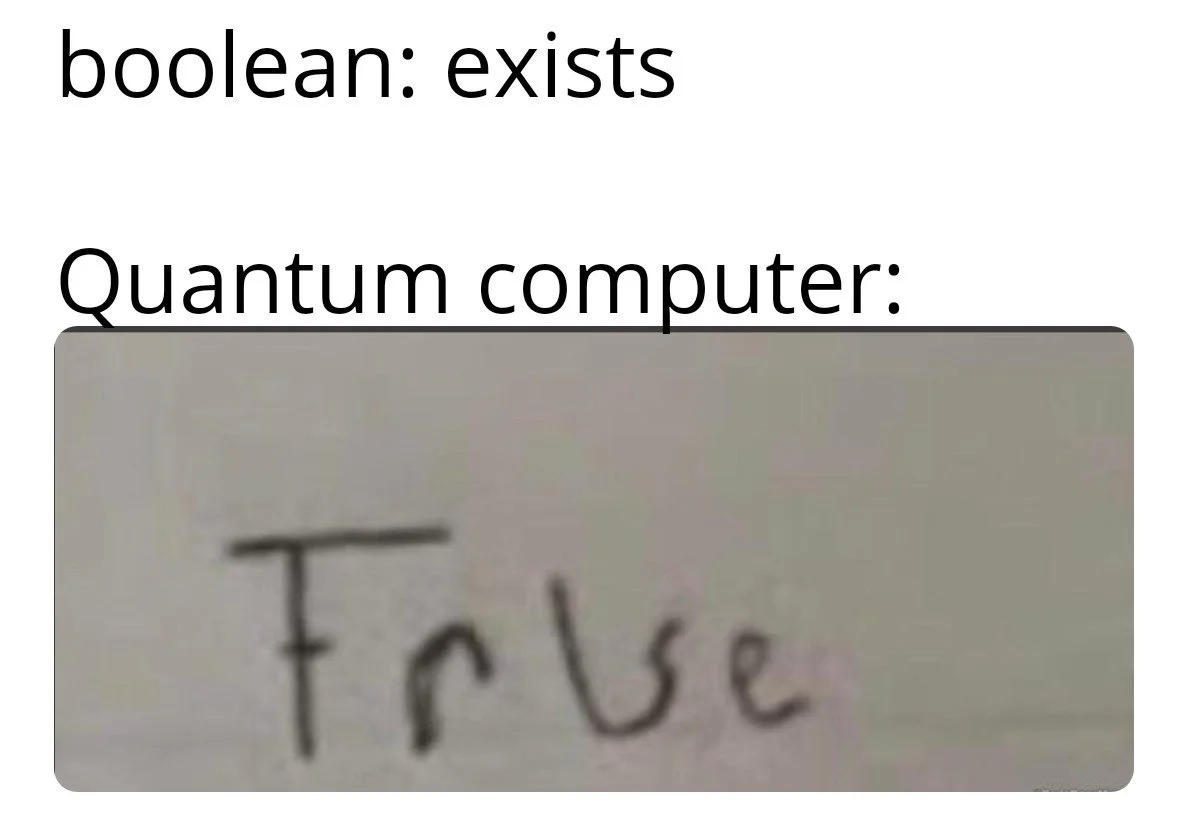

Introduction

Before starting, here is a very quick summary of what Quantum Machine Learning (QML) is. QML performs computations “in a Quantum way”: instead of the bits fixed at 0 or 1 that rule today's computers, it operates on qubits whose measurement outcomes are described by probabilities between 0 and 1. However, this probabilistic approach can only be achieved on special hardware called a “Quantum Computer”, and there are only a few in the world.

Thus, in order to run a QML algorithm, either a Quantum Computer or a “Quantum Simulator” is needed. A simulator is a traditional computer emulating the way the quantum operations would be performed on real Quantum hardware, with the advantage that it can run on any laptop. This will be the choice for running all the Quantum models in this post.

Something has to be said about the Quantum based models: they are not ready for medium or large datasets (at least as of early 2022). More than 10K rows or 5 features could get your laptop into trouble, at least on the simulator.
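To get a feeling for why simulators struggle, the back-of-the-envelope sketch below estimates two costs. It assumes a simulator that stores the full statevector as complex128 amplitudes (16 bytes each) and a kernel method that evaluates every pair of rows once; real simulators add overhead on top, and both function names are illustrative.

```python
def statevector_memory_bytes(num_qubits, bytes_per_amplitude=16):
    """Memory for a full statevector: 2**num_qubits complex amplitudes."""
    return (2 ** num_qubits) * bytes_per_amplitude


def kernel_entries(num_rows):
    """Distinct pairs (diagonal included) in a symmetric kernel matrix."""
    return num_rows * (num_rows + 1) // 2


for n in (5, 10, 20, 30):
    mib = statevector_memory_bytes(n) / 2 ** 20
    print(f"{n:>2} qubits -> {mib:,.4f} MiB")

print(f"10,000 rows -> {kernel_entries(10_000):,} kernel evaluations")
```

Under these assumptions memory doubles with every extra qubit, while the number of kernel evaluations grows quadratically with the number of rows.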

Installation

The Quantum library that has been chosen for this analysis is Qiskit. It can be easily installed as a Python library:

pip install qiskit-machine-learning

Once the library is installed, all the code explained in the following sections should run without issues, at least with the Qiskit versions available in early 2022.

Quantum instance

The first step in the Quantum Machine Learning pipeline is defining the backend (a Quantum Computer or a Quantum Simulator) where the computation is going to happen. For this case, a Quantum Simulator has been chosen and it can be instantiated with the code below:

from qiskit import BasicAer

backend = BasicAer.get_backend('statevector_simulator')

If the computation is to be sent to a real Quantum Computer, the following piece of code can achieve that, but an IBM account is needed for the code to work properly. The IBM account can be created here.

from qiskit import IBMQ

provider = IBMQ.load_account()
quantum_computer_id = 'quantum_computer_id'
backend = provider.get_backend(quantum_computer_id)

Once the backend has been set up, the common starting point for all Quantum models is the Quantum Instance. It can be instantiated with the code below:

from qiskit.utils import QuantumInstance

quantum_instance = QuantumInstance(backend)

The next thing to define is the number of qubits (the Quantum equivalent of binary bits) to use. A simple approach is to take as many qubits as the model has features:

num_qubits = len(feature_cols)

Before starting with the QML setup, it should be mentioned that each kind of problem Quantum Computing tries to solve requires a “Quantum Circuit”. A Quantum Circuit can be seen as a combination of Quantum Gates, measurements and so on (the same way traditional circuits are built). Depending on its architecture, a circuit may be better suited to certain types of problems: some suit SVMs, others are optimized for Neural Networks and, outside Machine Learning, some specialized architectures target optimization algorithms.
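To make the idea of a circuit as a sequence of gates more concrete, here is a small NumPy sketch, independent of Qiskit, that applies a Hadamard gate and a CNOT gate to two qubits and then reads off the measurement probabilities. The variable names are illustrative, and the first qubit is assumed to be the most significant one in the basis ordering (Qiskit itself orders qubits differently).

```python
import numpy as np

# Single-qubit gates as 2x2 matrices.
I2 = np.eye(2)
H = np.array([[1, 1], [1, -1]]) / np.sqrt(2)  # Hadamard: creates a superposition

# Two-qubit CNOT (control = first qubit, target = second qubit).
CNOT = np.array([
    [1, 0, 0, 0],
    [0, 1, 0, 0],
    [0, 0, 0, 1],
    [0, 0, 1, 0],
])

# Start in |00>: amplitude 1 on the first basis state.
state = np.zeros(4)
state[0] = 1.0

# The "circuit": H on the first qubit, then CNOT on both qubits.
state = np.kron(H, I2) @ state
state = CNOT @ state

# Measurement probabilities are the squared magnitudes of the amplitudes.
probabilities = np.abs(state) ** 2
for label, p in zip(["00", "01", "10", "11"], probabilities):
    print(f"P({label}) = {p:.2f}")
```

The two gates leave the qubits in an entangled state where only the outcomes 00 and 11 can be measured, each with probability 0.5.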

QSVC: Quantum Support Vector Classifier

Now it is time to set up a QSVC model. The only difference between the Quantum implementation and the classical SVC is the kind of kernel, which in this case is a Quantum one. To create an instance of this kernel, a “Feature Map” has to be created first:

from qiskit.circuit.library import ZFeatureMap

feature_map = ZFeatureMap(feature_dimension=num_qubits)

It is essentially a Quantum circuit that encodes the classical features into a quantum state, and it is one of the circuits that have proven most effective for implementing SVM algorithms. Once the Feature Map is set, the Quantum kernel is defined as:

from qiskit_machine_learning.kernels import QuantumKernel

qkernel = QuantumKernel(feature_map=feature_map, quantum_instance=quantum_instance)
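What a quantum kernel computes can be illustrated without Qiskit. The NumPy sketch below is not the library's implementation: it assumes a simplified feature map with a single layer (a Hadamard followed by a phase rotation of angle 2·x_i on each qubit), whereas the library's feature map may use more repetitions. The function names `feature_state` and `quantum_kernel_matrix` are hypothetical.

```python
import numpy as np


def feature_state(x):
    """Encode a feature vector into a product state, one qubit per feature.

    Each qubit is prepared as (|0> + exp(2j * x_i)|1>) / sqrt(2), i.e. a
    Hadamard followed by a phase rotation of angle 2 * x_i (assumed map).
    """
    state = np.array([1.0 + 0j])
    for xi in x:
        qubit = np.array([1.0, np.exp(2j * xi)]) / np.sqrt(2)
        state = np.kron(state, qubit)
    return state


def quantum_kernel_matrix(A, B):
    """Kernel entry K[i, j] = |<phi(A_i)|phi(B_j)>|^2 (state overlap)."""
    states_a = np.array([feature_state(a) for a in A])
    states_b = np.array([feature_state(b) for b in B])
    return np.abs(states_a.conj() @ states_b.T) ** 2


X = np.array([[0.1, 0.5], [0.4, -0.3], [1.2, 0.0]])
K = quantum_kernel_matrix(X, X)
print(np.round(K, 3))
```

For this simplified map each entry reduces to a product of cos²(x_i − y_i) over the features. A matrix like `K` could be passed to scikit-learn's `SVC(kernel='precomputed')`, which is conceptually how a kernel-based classifier uses it.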

If you are familiar with the scikit-learn syntax, the last step is very similar in syntax to any classical model training:

from qiskit_machine_learning.algorithms import QSVC

qsvc = QSVC(quantum_kernel=qkernel)
qsvc.fit(X_train, y_train)

Now the model is trained and stored in the qsvc variable. It implements the usual scikit-learn methods such as .predict() and .score() so, from now on, no more Quantum-specific code is needed.

QNNC: Quantum Neural Network Classifier

This is another Quantum algorithm that the Qiskit library implements; however, it is inspired by a Neural Network architecture instead of an SVM. One of the most popular circuit implementations is the following:

from qiskit_machine_learning.neural_networks import TwoLayerQNN

qnn_architecture = TwoLayerQNN(num_qubits, quantum_instance=quantum_instance)

Before instantiating the Quantum model, a callback function can be defined so that some info about the training process can be plotted on the screen at each iteration:

import matplotlib.pyplot as plt
from IPython.display import clear_output

def callback_graph(weights, obj_func_eval):
    # Redraw the plot from scratch on every iteration.
    clear_output(wait=True)
    # objective_func_vals is a global list defined before training starts.
    objective_func_vals.append(obj_func_eval)
    plt.title('Objective function vs iteration')
    plt.xlabel('Iteration')
    plt.ylabel('Objective function')
    plt.plot(range(len(objective_func_vals)), objective_func_vals)
    plt.show()

This function will plot a live graph with the objective function evaluation after each iteration. Now it is time to create the Quantum Neural Network Classifier:

from qiskit_machine_learning.algorithms.classifiers import NeuralNetworkClassifier
from qiskit.algorithms.optimizers import COBYLA

qnnc = NeuralNetworkClassifier(qnn_architecture, optimizer=COBYLA(maxiter=50), callback=callback_graph)

Before launching the training process, the target of the Machine Learning pipeline (whether the house has a swimming pool) should be changed from the actual classes (0, 1) to the classes (-1, 1), in order to properly train the QNNC algorithm.

objective_func_vals = []
y_train_qnnc = y_train.copy()
y_train_qnnc[y_train_qnnc == 0] = -1
qnnc.fit(X_train, y_train_qnnc.values)

Like the QSVC, the QNNC implements the same scikit-learn methods, which will be useful when getting the classification report.
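Since the QNNC is trained on the classes (-1, 1), its predictions have to be mapped back to (0, 1) before they can be compared with the original test labels. The sketch below shows one way to do that; the helper `to_binary_labels` is hypothetical, the predictions are made-up values standing in for the output of `qnnc.predict(X_test)`, and their column-vector shape is an assumption.

```python
import numpy as np
from sklearn.metrics import classification_report


def to_binary_labels(predictions):
    """Map predictions in {-1, 1} back to the original {0, 1} classes."""
    predictions = np.asarray(predictions).ravel()
    return np.where(predictions == -1, 0, predictions).astype(int)


# Made-up values standing in for y_test and qnnc.predict(X_test).
y_test = np.array([0, 1, 1, 0, 1, 0])
y_pred_qnnc = np.array([[-1], [1], [-1], [-1], [1], [1]])

y_pred = to_binary_labels(y_pred_qnnc)
print(classification_report(y_test, y_pred))
```

The call to `ravel()` makes the helper work whether the predictions come back as a flat array or as a column vector.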

Classical approach

Nothing to say here: the training process of the classical implementations is included just for benchmarking purposes. An SVM and a Neural Network based model have been chosen to allow a more direct comparison.

SVC: Support Vector Classifier

from sklearn.svm import SVC

svc = SVC()
svc.fit(X_train, y_train)

MLP: MultiLayer Perceptron

from sklearn.neural_network import MLPClassifier

mlp = MLPClassifier()
mlp.fit(X_train, y_train)

Benchmarking

After evaluating the four models, the results can be seen below:

QSVC report:

              precision    recall  f1-score   support

           0       0.70      0.65      0.68       114
           1       0.72      0.77      0.75       136

    accuracy                           0.72       250
   macro avg       0.71      0.71      0.71       250
weighted avg       0.72      0.72      0.71       250

QNNC report:

              precision    recall  f1-score   support

           0       0.43      0.46      0.45       114
           1       0.52      0.49      0.51       136

    accuracy                           0.48       250
   macro avg       0.48      0.48      0.48       250
weighted avg       0.48      0.48      0.48       250

SVC report:

              precision    recall  f1-score   support

           0       0.72      0.58      0.64       114
           1       0.70      0.81      0.75       136

    accuracy                           0.70       250
   macro avg       0.71      0.69      0.69       250
weighted avg       0.71      0.70      0.70       250

MLP report:

              precision    recall  f1-score   support

           0       0.69      0.66      0.68       114
           1       0.73      0.76      0.74       136

    accuracy                           0.71       250
   macro avg       0.71      0.71      0.71       250
weighted avg       0.71      0.71      0.71       250

The conclusions after getting the results are:

QML has very long training and execution times (at least in simulators) and it can handle only small samples of data. As the number of features (and therefore qubits) increases, the amount of RAM a simulator needs grows exponentially, and adding rows quickly increases the number of kernel evaluations.

The performance metrics of QSVC and the traditional approaches are quite similar, with QSVC being slightly better than the rest (at least for the chosen sample).

The number of training iterations of QNNC was limited due to long computation times, which likely explains the low performance of this model.

I hope you have found this post useful. If you have any questions, please write an email to pedro.munoz@whiteboxml.com and I will be glad to help you. The code that has been used to create this post can be downloaded here.